Confident AI Observability

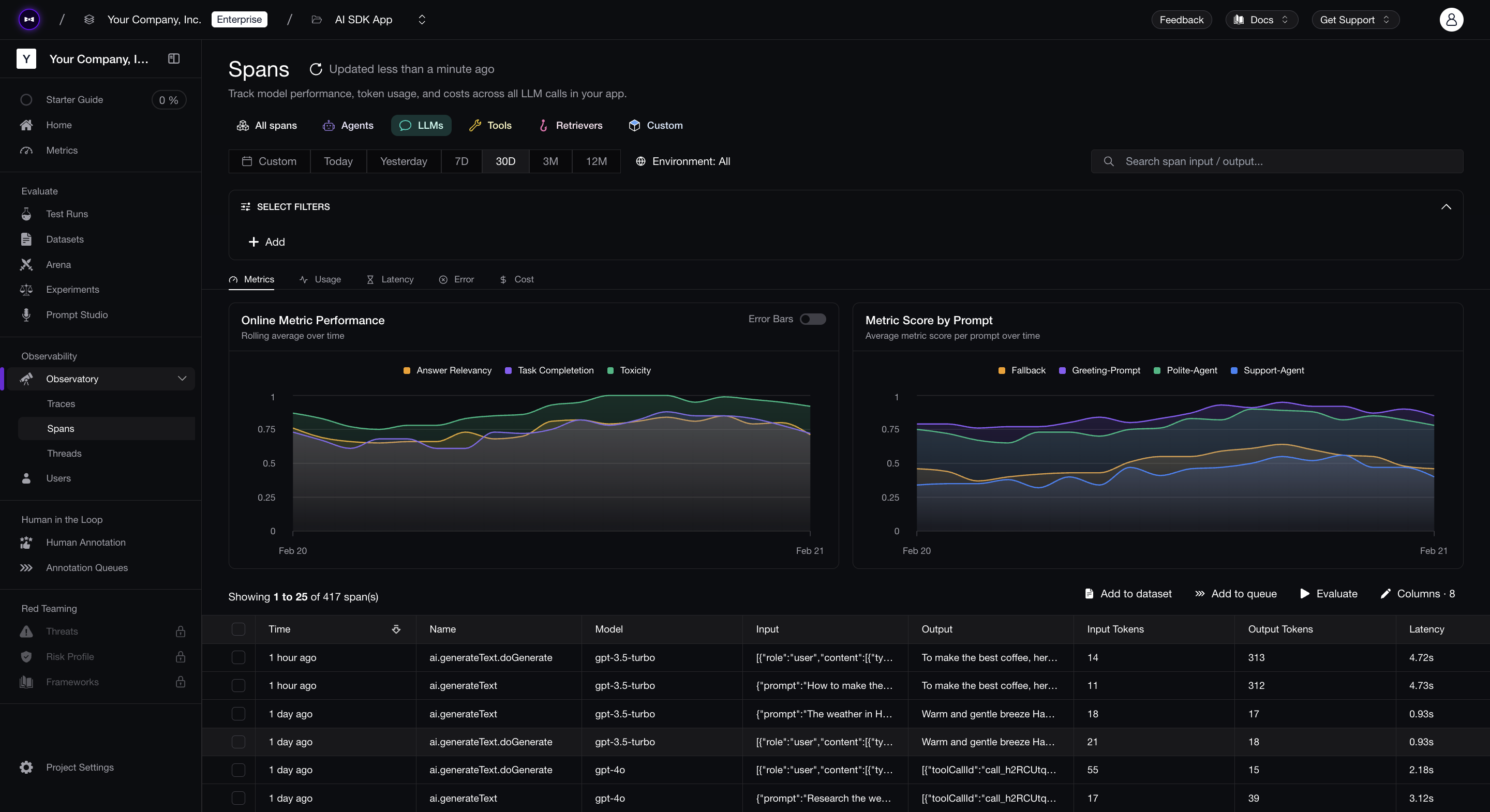

Confident AI is an LLM observability and evaluation platform for teams to build reliable AI applications in both development and production.

The deepeval-ts package integrates with the AI SDK's experimental_telemetry API to provide tracing, online evaluations, and session analytics.

Setup

To enable tracing, install deepeval-ts, configure your API key, and initialize a tracer using configureAiSdkTracing.

1. Install deepeval-ts

npm install deepeval-ts

2. Set Environment Variables

Sign up or log in to Confident AI to get your API key, then set it as an environment variable:

CONFIDENT_API_KEY="YOUR-PROJECT-API-KEY"

3. Configure Tracing
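
Before creating the tracer, it can help to fail fast when the API key is missing. The following is a minimal sketch, not part of deepeval-ts: the helper name requireEnv is hypothetical, and it assumes the key is read from the process environment under the CONFIDENT_API_KEY name shown above.

```typescript
// Environment-like map: variable names to (possibly missing) values.
type Env = Record<string, string | undefined>;

// Hypothetical helper: returns the trimmed value or throws a descriptive error.
function requireEnv(env: Env, name: string): string {
  const value = env[name]?.trim();
  if (!value) {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// In a Node.js application the first argument would be process.env, e.g.
//   requireEnv(process.env, 'CONFIDENT_API_KEY');
// A literal object is used here so the sketch runs without any setup.
const apiKey = requireEnv({ CONFIDENT_API_KEY: 'YOUR-PROJECT-API-KEY' }, 'CONFIDENT_API_KEY');
```

Calling the check once at startup surfaces a misconfigured deployment immediately instead of leaving traces silently missing.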

Import and call configureAiSdkTracing to create a tracer:

import { configureAiSdkTracing } from 'deepeval-ts';

const tracer = configureAiSdkTracing();

Tracing Your Application

You can now pass the tracer object into the experimental_telemetry field of any AI SDK call to get your traces on the Confident AI platform.
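
Because every call repeats the same telemetry settings, a small helper can build that object once. This is a sketch under assumptions: telemetryFor and TelemetrySettings are hypothetical names, not part of the AI SDK or deepeval-ts, and the object shape simply mirrors the isEnabled and tracer fields used on this page.

```typescript
// Shape of the settings object used in this page's examples.
interface TelemetrySettings<T> {
  isEnabled: boolean;
  tracer: T;
}

// Hypothetical helper: wraps a tracer in the telemetry settings object.
function telemetryFor<T>(tracer: T, isEnabled: boolean = true): TelemetrySettings<T> {
  return { isEnabled, tracer };
}

// Stand-in object so the sketch runs without any packages installed;
// in an application this would be the result of configureAiSdkTracing().
const placeholderTracer = { label: 'placeholder' };
const telemetry = telemetryFor(placeholderTracer);

// Assumed usage with an AI SDK call:
//   generateText({ model, prompt, experimental_telemetry: telemetry });
```

Passing false as the second argument gives a single place to switch tracing off, for example in local tests.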

Here are some examples of how to trace various AI SDK functions using Confident AI's tracer:

Generate text

import { generateText } from 'ai';
import { openai } from '@ai-sdk/openai';
import { configureAiSdkTracing } from 'deepeval-ts';

const tracer = configureAiSdkTracing();

const { text } = await generateText({
  model: openai('gpt-4o'),
  prompt: 'What are LLMs?',
  experimental_telemetry: {
    isEnabled: true,
    tracer,
  },
});

Stream text

import { streamText } from 'ai';
import { openai } from '@ai-sdk/openai';
import { configureAiSdkTracing } from 'deepeval-ts';

const tracer = configureAiSdkTracing();

const result = streamText({
  model: openai('gpt-4o'),
  prompt: 'Invent a new holiday and describe its traditions.',
  experimental_telemetry: {
    isEnabled: true,
    tracer,
  },
});

for await (const textPart of result.textStream) {
  console.log(textPart);
}

Generate text with tool calls

import { generateText, tool, stepCountIs } from 'ai';
import { openai } from '@ai-sdk/openai';
import { configureAiSdkTracing } from 'deepeval-ts';
import { z } from 'zod';

const tracer = configureAiSdkTracing();

const result = await generateText({
  model: openai('gpt-4o'),
  tools: {
    weather: tool({
      description: 'Get the weather in a location',
      inputSchema: z.object({
        location: z.string().describe('The location to get the weather for'),
      }),
      execute: async ({ location }) => ({
        location,
        temperature: 72 + Math.floor(Math.random() * 21) - 10,
      }),
    }),
  },
  stopWhen: stepCountIs(5),
  prompt: 'What is the weather in San Francisco?',
  experimental_telemetry: {
    isEnabled: true,
    tracer,
  },
});

Generate structured output

import { generateObject } from 'ai';
import { openai } from '@ai-sdk/openai';
import { configureAiSdkTracing } from 'deepeval-ts';
import { z } from 'zod';

const tracer = configureAiSdkTracing();

const { object } = await generateObject({
  model: openai('gpt-4o'),
  schema: z.object({
    recipe: z.object({
      name: z.string(),
      ingredients: z.array(z.object({ name: z.string(), amount: z.string() })),
      steps: z.array(z.string()),
    }),
  }),
  prompt: 'Generate a lasagna recipe.',
  experimental_telemetry: {
    isEnabled: true,
    tracer,
  },
});

The following examples show traces generated from the snippets above:

Configuration

You can customize trace grouping and evaluation behavior by passing options to configureAiSdkTracing. This allows you to:

- Group related traces (for example, chat sessions)

- Associate prompt versions with traces

- Enable online evaluation at span and trace levels
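
As a sketch of the first item, traces from the same chat session can share one thread identifier. The example assumes the name, threadId, userId and environment options described under Setting Trace Attributes; the helper traceAttributesFor and the trace name 'chat-turn' are hypothetical and only illustrate the idea.

```typescript
// Attribute names mirror the options shown under Setting Trace Attributes.
interface TraceAttributes {
  name: string;
  threadId: string;
  userId: string;
  environment: string;
}

// Hypothetical helper: every turn of one conversation gets the same threadId,
// so its traces can be grouped together.
function traceAttributesFor(
  conversationId: string,
  userId: string,
  environment: string,
): TraceAttributes {
  return {
    name: 'chat-turn', // illustrative trace name
    threadId: conversationId,
    userId,
    environment,
  };
}

// Two turns of the same conversation resolve to the same thread.
const firstTurn = traceAttributesFor('thread-123', 'user-456', 'production');
const secondTurn = traceAttributesFor('thread-123', 'user-456', 'production');

// Assumed usage: const tracer = configureAiSdkTracing(firstTurn);
```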

Setting Trace Attributes

You can pass attributes like name, threadId, userId and environment to make it easier to find and filter your traces.

import { generateText } from 'ai';
import { openai } from '@ai-sdk/openai';
import { configureAiSdkTracing } from 'deepeval-ts';

const tracer = configureAiSdkTracing({
  name: 'AI SDK Confident AI Tracing',
  threadId: 'thread-123',
  userId: 'user-456',
  environment: 'production',
});

const { text } = await generateText({
  model: openai('gpt-4o'),
  prompt: 'How do you make the best coffee?',
  experimental_telemetry: {
    isEnabled: true,
    tracer: tracer,
  },
});

Log Managed Prompts

If you use Confident AI Prompt Management, you can associate traces with a specific prompt version. Pass a Prompt object to configureAiSdkTracing to associate your traces with the prompt version used at runtime.

import { generateText } from 'ai';
import { openai } from '@ai-sdk/openai';
import { configureAiSdkTracing, Prompt } from 'deepeval-ts';

const prompt = new Prompt({ alias: 'my-prompt-alias' });
await prompt.pull();

const tracer = configureAiSdkTracing({
  confidentPrompt: prompt,
});

const { text } = await generateText({
  model: openai('gpt-4o'),
  prompt: 'How do you make the best coffee?',
  experimental_telemetry: {
    isEnabled: true,
    tracer: tracer,
  },
});

Logging prompts lets you monitor which prompts are running in production and which ones perform best over time.

Make sure to pull the prompt before passing it to configureAiSdkTracing. Without pulling first, the prompt version will not be visible on Confident AI.

Online Evaluations

Confident AI supports automatic online evaluation of your traces by passing a metric collection defined in your project. To enable online evaluations:

- Create a metric collection in the Confident AI platform

- Pass your metric collection name in the configureAiSdkTracing options

- You can pass different metric collections for trace, LLM span and tool span levels

Here's an example of how to attach metric collections to your traces:

import { generateText } from 'ai';
import { openai } from '@ai-sdk/openai';
import { configureAiSdkTracing } from 'deepeval-ts';

const tracer = configureAiSdkTracing({
  metricCollection: 'my-trace-metrics',
  llmMetricCollection: 'my-llm-metrics',
  toolMetricCollection: 'my-tool-metrics',
});

const { text } = await generateText({
  model: openai('gpt-4o'),
  prompt: 'How do you make the best coffee?',
  experimental_telemetry: {
    isEnabled: true,
    tracer: tracer,
  },
});

All incoming traces will now be evaluated automatically. Evaluation results are visible in the Confident AI Observatory alongside your traces.

You can find a more comprehensive guide on AI SDK tracing with deepeval-ts in the Confident AI docs.